The $700 Billion AI Infrastructure Supercycle

Five companies are about to spend roughly $700 billion in a single year on artificial intelligence infrastructure.

To put that in perspective, that's roughly 2.2% of US GDP. It represents the largest corporate capital deployment in history, rivaling the combined scale of the interstate highway system, rural electrification, and nationwide broadband buildouts of the 20th century.

Amazon has committed to $200 billion. Microsoft is on pace for roughly $145 billion. Google is guiding between $175 billion and $185 billion. Meta will spend up to $135 billion. Oracle is projected at $75 billion.

This represents a 66% year-over-year increase from 2025. The combined total has more than quadrupled in just three years, rising from $153 billion in 2023 to an estimated $695 billion in 2026.
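For readers who want to check the growth math, here is a minimal Python sketch using only the figures quoted above. The compound annual growth rate and the implied 2025 total are derived values, not reported ones, and the variable names are illustrative.

```python
# Figures quoted in the text, in billions of US dollars.
capex_2023 = 153.0
capex_2026_est = 695.0
yoy_growth_2026 = 0.66  # 66% increase over 2025

# Overall multiple across the three-year span.
multiple = capex_2026_est / capex_2023

# Compound annual growth rate over three annual steps (2023 -> 2026).
years = 3
cagr = multiple ** (1 / years) - 1

# 2025 total implied by the 66% year-over-year figure (derived, not quoted).
implied_capex_2025 = capex_2026_est / (1 + yoy_growth_2026)

print(f"Multiple 2023 -> 2026: {multiple:.2f}x")
print(f"Implied CAGR: {cagr:.1%}")
print(f"Implied 2025 total: ${implied_capex_2025:.0f}B")
```

The multiple comes out at about 4.5x, which implies an average growth rate in the mid-60s percent per year, in line with the latest year-over-year figure.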

Most investors see this and assume the AI boom is accelerating with no signs of slowing down.

The data reveals a more complicated picture.

The Scale of the GPU Demand Surge

NVIDIA reported $57 billion in quarterly revenue. That beat expectations by over $2 billion. Their Q4 guidance of $65 billion suggests 66% year-over-year growth in a business already doing nearly a quarter-trillion dollars in annual revenue.

The semiconductor industry as a whole is approaching $1 trillion for the first time in history. The World Semiconductor Trade Statistics group projects $975.5 billion in 2026, up 26.3% year-over-year.

These numbers support the thesis that hyperscalers are in an arms race to build AI capacity. Whoever controls the infrastructure will control the AI economy.

However, capital expenditure is now consuming nearly 100% of hyperscaler operating cash flow.

For the past decade, these companies spent around 40% of their operating cash flow on infrastructure. Today, that figure is close to 100%. Nearly every dollar generated by operations is being redirected into data centers, GPUs, and power systems, leaving little free cash flow behind.
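A rough sketch of what that shift means for free cash flow, using the standard definition (operating cash flow minus capital expenditure). The operating cash flow of 100 is a hypothetical round number chosen for illustration, not a reported figure. Only the 40% and 100% ratios come from the text.

```python
def free_cash_flow(operating_cash_flow: float, capex_ratio: float) -> float:
    """Free cash flow when capex equals capex_ratio times operating cash flow."""
    capex = operating_cash_flow * capex_ratio
    return operating_cash_flow - capex

# Hypothetical operating cash flow, in arbitrary units (an assumption).
ocf = 100.0

historical_fcf = free_cash_flow(ocf, 0.40)  # capex at ~40% of cash flow
current_fcf = free_cash_flow(ocf, 1.00)     # capex at ~100% of cash flow

print(f"FCF at 40% capex ratio:  {historical_fcf:.0f}")
print(f"FCF at 100% capex ratio: {current_fcf:.0f}")
```

At a 40% ratio, 60 of every 100 units of operating cash flow is left over. At 100%, nothing is.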

The spending represents a full-scale bet on a future that has not yet arrived.

The Return on Investment Question

The question is not whether AI infrastructure spending is massive. The question is whether this spending can generate adequate returns before the assets depreciate.

The case for continued spending rests on three factors.

Supply constraints validate demand. Hyperscalers have $1.63 trillion in committed customer contracts. NVIDIA's GPUs are sold out 12 to 18 months in advance. High-bandwidth memory (HBM) is completely sold out through 2026 across all three major producers.

The Jevons Paradox applies. Cheaper AI leads to more AI consumption, not less spending. NVIDIA's new Rubin platform cuts training requirements by 4x and inference costs by 10x. Lower costs should accelerate adoption and drive even more infrastructure demand.

Enterprise adoption is still early. AI workloads today are dominated by hyperscalers training foundation models. The enterprise migration has not yet happened. When it does, revenue should catch up to capex.
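The Jevons Paradox argument depends on how strongly demand responds to lower prices. Below is a minimal sketch assuming a constant-elasticity demand curve, which is a textbook simplification and not a model from any of the sources cited here. The elasticity values are hypothetical. Only the 10x inference cost reduction comes from the text.

```python
def spend_multiple(cost_factor: float, elasticity: float) -> float:
    """Change in total spend when unit cost is multiplied by cost_factor.

    Assumes quantity demanded scales as cost_factor ** -elasticity,
    so spend (price * quantity) scales as cost_factor ** (1 - elasticity).
    """
    return cost_factor ** (1.0 - elasticity)

# The 10x inference cost reduction quoted in the text.
cost_factor = 1 / 10

# Hypothetical demand elasticities (assumptions for illustration only).
for elasticity in (0.5, 1.0, 1.5):
    multiple = spend_multiple(cost_factor, elasticity)
    print(f"elasticity {elasticity}: total spend changes by {multiple:.2f}x")
```

Under this assumption, total spending only rises after a price cut when elasticity is above 1. Below 1, cheaper AI means less total spending, which is the scenario the Jevons argument is betting against.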

The opposing view is straightforward. What if the monetization does not materialize on the expected timeline?

The Power Infrastructure Constraint

Beyond the ROI question, there's a second constraint emerging that could limit how fast this buildout can actually scale. Power infrastructure is becoming a bottleneck.

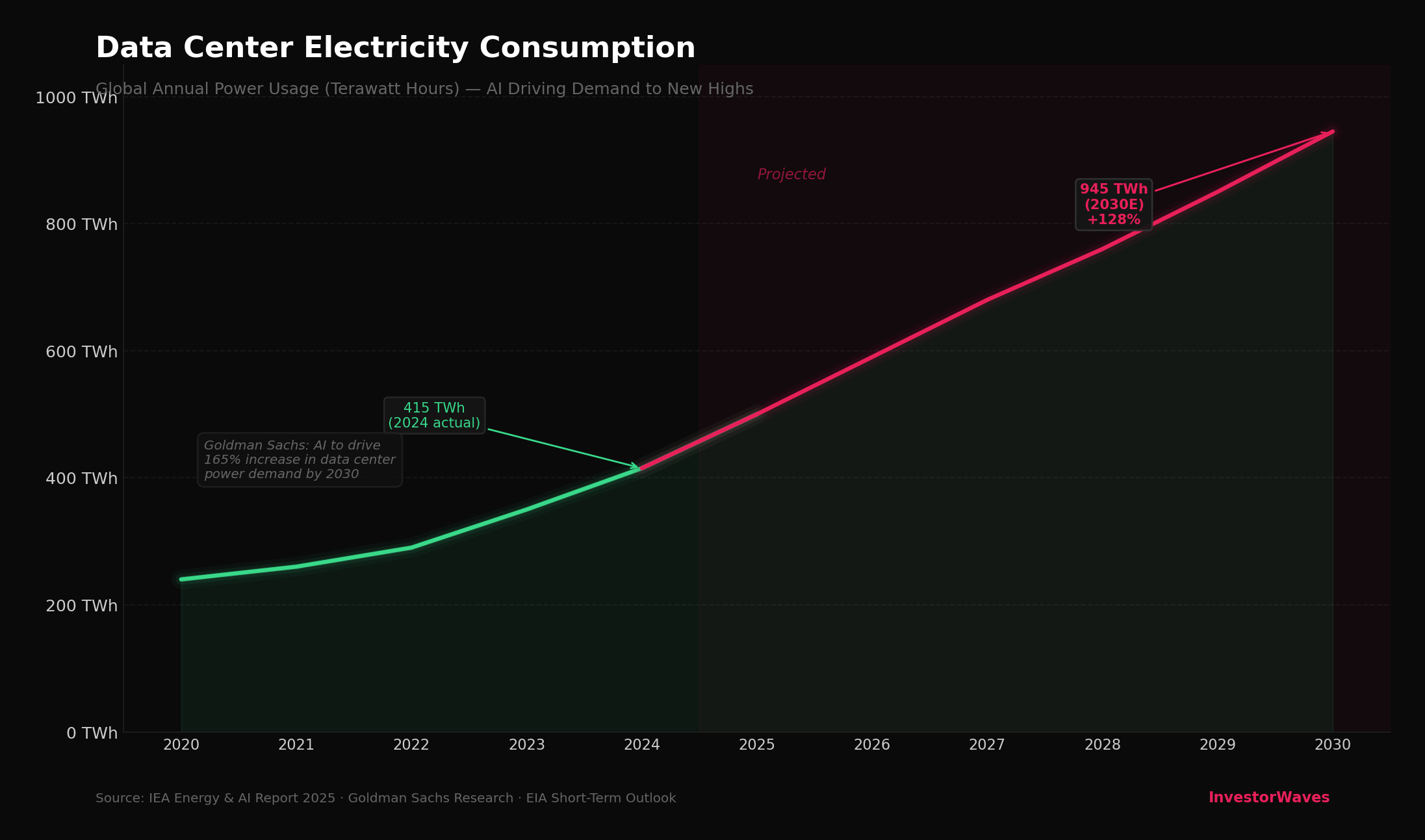

Global data center electricity consumption was 415 terawatt-hours in 2024. The International Energy Agency projects 945 terawatt-hours by 2030 in its base case. That represents a 128% increase.
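The IEA figures can be turned into an annual growth rate with a few lines of Python. This is a sketch using only the two numbers quoted above. The annualized rate is derived, not an IEA figure, and it assumes smooth compounding across the six years.

```python
# IEA base-case figures quoted in the text, in terawatt-hours.
twh_2024 = 415.0
twh_2030 = 945.0

# Total percentage increase over the period.
pct_increase = twh_2030 / twh_2024 - 1

# Implied compound annual growth rate over six annual steps (2024 -> 2030).
years = 2030 - 2024
cagr = (twh_2030 / twh_2024) ** (1 / years) - 1

print(f"Total increase: {pct_increase:.0%}")
print(f"Implied annual growth: {cagr:.1%}")
```

That works out to between 14% and 15% compound growth per year.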

Goldman Sachs estimates AI will drive a 175% increase in data center power demand by 2030.

Data centers consumed about 4.4% of US electricity in 2023. By 2028, the Department of Energy projects that figure could reach anywhere from 6.7% to 12%, depending on AI adoption rates.

That's faster growth than any other sector of the economy. The grid infrastructure does not exist yet to support it.

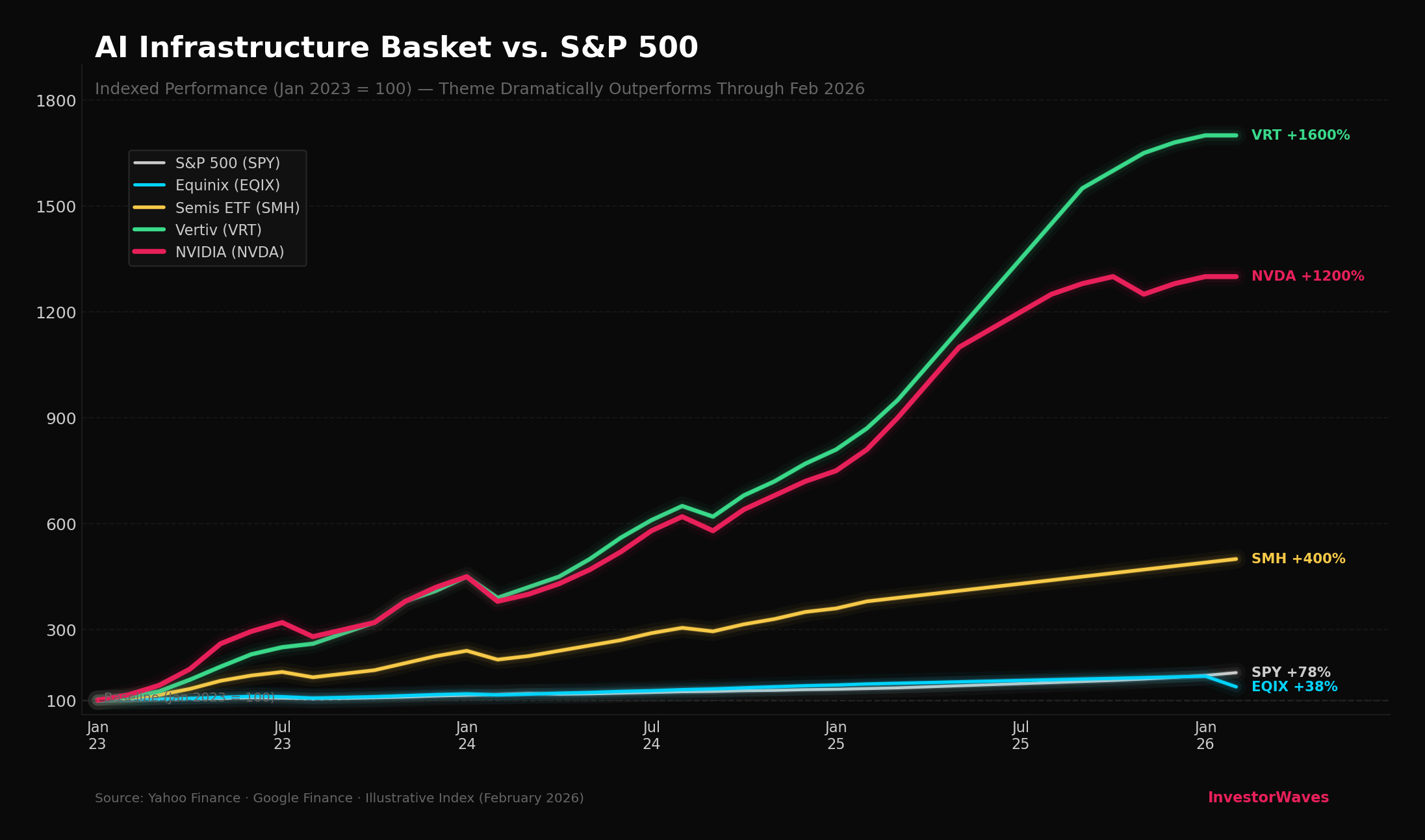

Vertiv, which makes data center cooling and power systems, is one beneficiary. The company reported Q4 organic order growth of 252%, and the stock hit an all-time high of $248.51 on February 11th.

This represents more than just a supply chain opportunity. It's a fundamental constraint on how fast AI infrastructure can scale.

If power capacity cannot keep pace with GPU deployment, the $700 billion spending wave gets bottlenecked at the grid level.

The $2.5 Trillion AI Spending Forecast

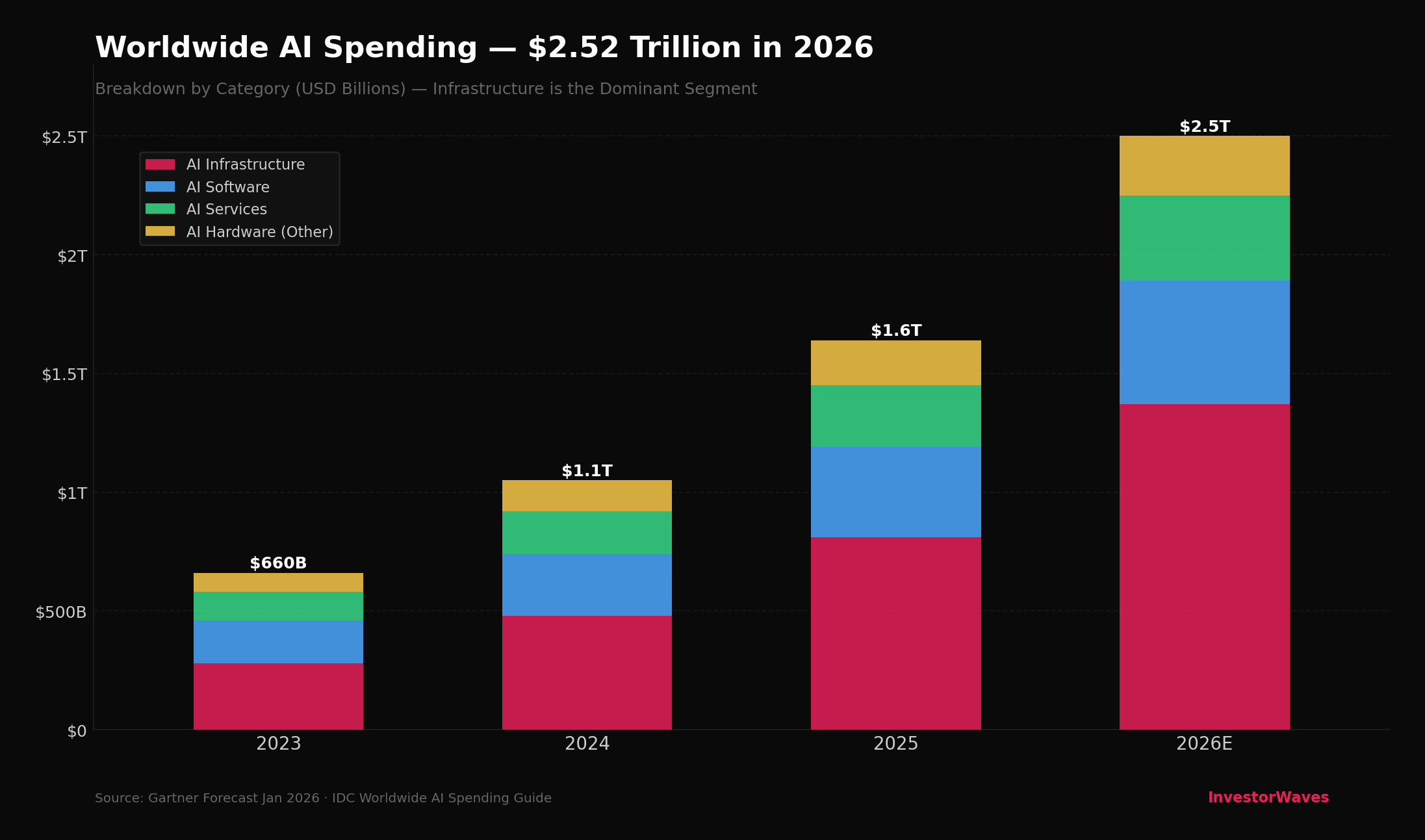

Gartner forecasts that worldwide AI spending will hit $2.52 trillion in 2026. AI infrastructure represents $1.37 trillion of that total, or 54%. This includes data centers, compute, networking, storage, and power.

This is why every major investment bank has identified AI infrastructure as the top thematic investing opportunity for 2026.

The value chain has five distinct layers. Each layer has different risk-return profiles.

Hyperscalers (Amazon, Microsoft, Google, Meta, Oracle)

GPUs and AI Chips (NVIDIA, Broadcom, AMD, Marvell)

Memory (Micron, SK Hynix, Samsung)

Data Center REITs (Equinix, Digital Realty)

Power and Cooling (Vertiv, Eaton, Constellation Energy)

For broad exposure, the VanEck Semiconductor ETF (SMH) remains the most popular vehicle. It's up roughly 400% on a total return basis since January 2023.

What the Data Is Signaling Now

Can this level of spending generate adequate returns?

History offers a useful parallel. During the dot-com boom, telecom companies spent over $500 billion building out fiber optic networks. The infrastructure was real. The demand projections were real. The monetization timeline was wrong. Most of those companies went bankrupt despite building essential infrastructure.

The difference this time is that balance sheets are stronger. Amazon, Microsoft, and Google have the financial cushion to absorb lower returns for longer.

That does not guarantee the returns will materialize.

NVIDIA reports earnings on February 25th. That's the next major catalyst.

After that, NVIDIA's GTC conference runs March 16 to 19, where the company will lay out the roadmap for its next generation of chips.

The AI theme is still alive, but the money is constantly rotating among different sub-themes within AI. Finding the stocks in the theme and then buying them at the right prices is the hard part.

-Pierce, InvestorWaves.com

Note: This article does not provide investment advice. Any stocks mentioned should not be taken as recommendations. Your investments are solely your decisions.